How reproducible is the response to +2°C Arctic sea-ice loss in a large ensemble of simulations?

How does a rapidly warming Arctic affect mid-latitude climate, now and in future years? This question has long been a focus of climate research. The abundant literature on the subject shows contrasting results, in part because of differences in the protocols used to model the climate response to Arctic sea-ice loss. The Polar Amplification Model Intercomparison Project (PAMIP) is a new international collaboration that provides guidelines for running climate simulations with imposed sea-ice loss at +2°C of global warming relative to the pre-industrial era (a short time horizon, unfortunately). The first results from PAMIP show that it is already succeeding in producing modeling results that agree better with one another, i.e., a common protocol reduces uncertainty in the large-scale atmospheric response. For instance, most models find a weakening of the westerlies on the poleward flank of the eddy-driven jets in subpolar latitudes, although generally with a weak amplitude.

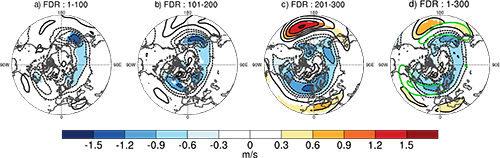

In addition to protocol and model physics, the chaotic noise of atmospheric variability (internal variability) is another major source of uncertainty in the response to sea-ice loss. A common practice is to run large ensembles of simulations, so that the noise is smoothed out when the members are averaged together. PAMIP recommends running at least 100 ensemble members for the experiments. However, in a recent study, Peings et al. point out that 100 ensemble members do not in fact guarantee a robust assessment of the response. After extending their runs to 300 ensemble members, they found substantial variations in the remote response, as shown in the figure below for the 700 hPa zonal wind.

DJFM (December-March) response of U700 (700 hPa zonal wind) in fully-coupled runs of SC-WACCM4, for ensemble members: a) 1-100, b) 101-200, c) 201-300, d) 1-300 (ensemble mean). Shading: Student's t-test + False Discovery Rate test. Climatology is shown in green in panel d. From Peings et al. (2021).

In this case, members 201-300 exhibit a much larger response than members 1-100, although each 100-member subset represents exactly the same experiment (only the atmospheric initial conditions differ from one ensemble member to the next). Such inconsistency in the response, even after applying a False Discovery Rate correction, is problematic. Divergence in results can be falsely attributed to structural differences among models when the results are in fact still largely influenced by internal variability. It is worth investigating whether this uncertainty also exists for other low signal-to-noise climate responses in large ensemble simulations. For future studies, the authors propose a routine called the Consistency Discovery Rate (CDR) test, which controls for the reproducibility of the anomalies across the ensemble of runs. By discarding inconsistent (i.e., non-reproducible) anomalies, it allows for a more robust assessment of the response to a given climatic forcing in ensembles of climate simulations.
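The idea of comparing sub-ensembles can be illustrated with a toy example. The sketch below is not the CDR test of Peings et al., whose details are not given here. It only mimics the situation at a single grid point using synthetic Gaussian noise: a weak response is simulated for 300 members, split into three 100-member subsets, and kept only if every subset is significant with the same sign. All function names, the signal and noise amplitudes, and the critical t value (about 1.984 for a two-sided 5% test with 99 degrees of freedom) are assumptions made for illustration.

```python
import random
import statistics


def simulate_responses(n_members, true_signal, noise_std, rng):
    """Synthetic per-member response at one grid point: signal plus Gaussian noise."""
    return [true_signal + rng.gauss(0.0, noise_std) for _ in range(n_members)]


def t_statistic(sample):
    """One-sample t statistic for the null hypothesis of a zero mean response."""
    mean = statistics.fmean(sample)
    stderr = statistics.stdev(sample) / len(sample) ** 0.5
    return mean / stderr


def split(sample, size):
    """Cut the ensemble into consecutive sub-ensembles of `size` members."""
    return [sample[i:i + size] for i in range(0, len(sample), size)]


def consistent_sign(sample, size, t_crit=1.984):
    """True only if every sub-ensemble is significant and all share the same sign."""
    ts = [t_statistic(chunk) for chunk in split(sample, size)]
    return all(t > t_crit for t in ts) or all(t < -t_crit for t in ts)


# Hypothetical low signal-to-noise case: signal 0.2, member-to-member noise 1.0.
rng = random.Random(0)
responses = simulate_responses(300, true_signal=0.2, noise_std=1.0, rng=rng)

for k, chunk in enumerate(split(responses, 100), start=1):
    print(f"subset {k}: mean = {statistics.fmean(chunk):+.3f}, "
          f"t = {t_statistic(chunk):+.2f}")
print(f"all 300: mean = {statistics.fmean(responses):+.3f}")
print("reproducible across subsets:", consistent_sign(responses, 100))
```

With these assumed amplitudes, the standard error of a 100-member mean (0.1) is half the size of the signal itself, so the three subset means can differ noticeably even though they sample the same experiment. Changing the seed or the signal strength shows how often a weak response passes in one subset and fails in another.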

Are 100 Ensemble Members Enough to Capture the Remote Atmospheric Response to +2°C Arctic Sea Ice Loss? (Journal of Climate)

Topics

- Arctic

- Internal Variability

- Modeling